Time: 2025-12-08

A research team led by WANG Zuoren from the Institute of Neuroscience, Center for Excellence in Brain Science and Intelligence Technology, Chinese Academy of Sciences, in collaboration with LIU Chenglin from the Institute of Automation, Chinese Academy of Sciences, has proposed a fundamentally new learning paradigm inspired by the biological brain.

The findings were published in the journal Neurocomputing on December 5, 2025, under the title: “Towards Biologically Plausible DNN Optimization: Replacing Backpropagation and Loss Functions with a Top-Down Credit Assignment Network”.

The study demonstrates, for the first time, that a biologically inspired Top-Down Credit Assignment Network (TDCA-net) can simultaneously replace loss functions and backpropagation in deep learning. Across a wide range of non-convex optimization, vision, reinforcement learning, and robotic control tasks, TDCA-net achieves faster convergence, higher stability, better generalization, and reduced computational cost, offering a promising new route toward next-generation brain-like AI.

For decades, the success of artificial intelligence has relied on the classical training recipe of explicitly defined loss functions plus backpropagation. Yet a long-standing question persists: the human brain does not use backpropagation, so how does it learn so efficiently? And can artificial systems learn effectively without it?

In neuroscience, studies show that the brain lacks explicit numerical loss functions, precise gradient-based error propagation, and symmetric, layer-by-layer credit assignment pathways. Instead, top-down regulatory signals originating from high-level cognitive areas, such as the prefrontal cortex and cingulate cortex, appear to play a central role in guiding learning throughout the cortex. This suggests that biological learning may rely on an endogenous, top-down modulation mechanism fundamentally different from artificial backpropagation.

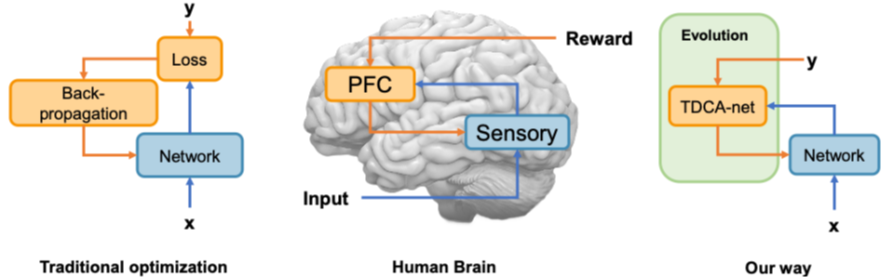

Figure 1: Comparison of different learning approaches: traditional optimization, biological brains, and our TDCA network approach.

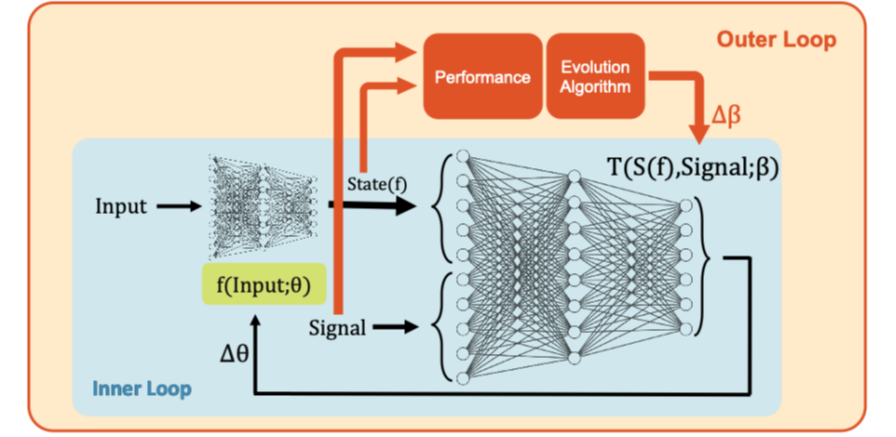

Motivated by these insights, the authors propose a framework that consists of two components: 1) a bottom-level task network, responsible for performing the actual task; 2) a top-down credit assignment network, responsible for generating learning signals. Unlike traditional methods that compute gradients through an explicit loss function and backpropagation, the TDCA-network directly generates the signals used to update the task-network parameters, entirely replacing both the loss function and the backpropagation process. By mimicking the hierarchical control structure of the human brain, the TDCA-network provides a learning mechanism that is structurally more aligned with biological cognition.

Figure 2: Schematic diagram of the top-down learning framework.
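The data flow of the two-component framework can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the function names, the single linear layer used as the task network, the two-parameter top-down network `phi`, and the choice to feed the top-down network the task output together with a target vector. With the hand-picked values `phi = (1.0, -1.0)` the generated signal coincides with the classic delta rule; in the framework described above, the top-down network would itself be learned, and how that is done is not covered here.

```python
def task_forward(W, x):
    """Bottom-level task network (assumed here to be one linear layer): y = W x."""
    return [sum(w * x_j for w, x_j in zip(row, x)) for row in W]


def credit_signals(phi, y, target):
    """Hypothetical top-down network: one learning signal per output unit.

    phi = (a, b) are illustrative parameters, not values from the paper.
    """
    a, b = phi
    return [a * t + b * y_i for y_i, t in zip(y, target)]


def apply_update(W, x, signals, lr):
    """Update task weights directly from the top-down signals.

    No loss value is computed and nothing is backpropagated.
    """
    return [[w + lr * s * x_j for w, x_j in zip(row, x)]
            for row, s in zip(W, signals)]


def train(W, x, target, phi, lr, steps):
    """Repeat forward pass, top-down signal generation, and weight update."""
    for _ in range(steps):
        y = task_forward(W, x)
        signals = credit_signals(phi, y, target)
        W = apply_update(W, x, signals, lr)
    return W


if __name__ == "__main__":
    x = [1.0, 0.5, -0.5]
    target = [1.0, -1.0]
    W0 = [[0.0] * 3 for _ in range(2)]
    W = train(W0, x, target, phi=(1.0, -1.0), lr=0.5, steps=20)
    print(task_forward(W, x))  # should be close to the target vector
```

The point of the sketch is the interface: the task network only ever receives update signals from the top-down module, so swapping in a different (learned) `credit_signals` changes the learning rule without touching the task network.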

The research team validated the TDCA-network on a wide range of representative tasks: 1) non-convex optimization, where standard algorithms often become trapped in local minima; 2) image classification, including MNIST and Fashion-MNIST; 3) reinforcement learning, including CartPole, Pendulum, BipedalWalker, and MetaWorld robotic tasks.

Across these tasks, TDCA exhibited several key advantages: 1) much faster convergence and greater ability to escape local minima; 2) stronger robustness to initialization and hyperparameter choices; 3) improved generalization and transferability to new tasks; 4) overall better performance than backpropagation-based training and multiple biologically plausible learning baselines.

Notably, in reinforcement learning and robotic control, a single TDCA top-down module can guide learning across multiple distinct tasks, resembling the brain's ability to apply general learning strategies across contexts.

The TDCA-network framework represents an important step toward bridging deep learning and biological learning mechanisms. Its broader implications include: 1) providing a new training paradigm for next-generation neuromorphic and brain-inspired AI systems; 2) offering a low-power, hardware-friendly learning mechanism suitable for intelligent chips; 3) supplying a computational model for understanding real neural credit assignment; 4) enabling more efficient learning strategies for robots and reinforcement learning systems.

In the long run, TDCA opens a promising pathway toward artificial systems that learn more like the brain: without explicit losses, without backpropagation, and with more flexible, generalizable strategies.

Dr. CHEN Jianhui is the first author of this paper. Professors WANG Zuoren and LIU Chenglin are the corresponding authors, and Professor YANG Tianming made significant contributions to the paper. This work was supported by the Strategic Priority Research Program of the Chinese Academy of Sciences and the Science and Technology Innovation 2030 Major Project.
