Time:2019-10-09

In a study published in Neuron, Dr. GU Yong’s lab at the Center for Excellence in Brain Science and Intelligence Technology (Institute of Neuroscience) of the Chinese Academy of Sciences, in collaboration with Prof. Alexandre POUGET of the University of Geneva, Switzerland, provided new insights into the neural mechanisms underlying optimal multisensory decision making.

To make effective decisions, animals often need to integrate evidence across sensory modalities and over time, a process known as multisensory decision making. The problem is conceptually complex because the optimal solution requires each piece of sensory evidence to be weighted in proportion to its time-varying reliability, which is typically not known in advance.
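
As a textbook illustration of what weighting evidence "in proportion to reliability" means, consider two independent cues with Gaussian noise. The minimal Python sketch below is not taken from the study: the function name `combine_cues` and the numerical values are hypothetical, and reliability is assumed to be the inverse of each cue's variance.

```python
def combine_cues(estimates, variances):
    """Reliability-weighted average of independent Gaussian cues.

    Assumption: reliability is defined as 1 / variance, and the cues
    are independent, so the combined reliability is the sum of the
    individual reliabilities.
    """
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    combined = sum(r * x for r, x in zip(reliabilities, estimates)) / total
    return combined, 1.0 / total


# Hypothetical heading estimates (degrees) and variances for two cues.
visual_estimate, vestibular_estimate = 2.0, 7.0
visual_variance, vestibular_variance = 1.0, 4.0

heading, variance = combine_cues(
    [visual_estimate, vestibular_estimate],
    [visual_variance, vestibular_variance],
)
print(heading, variance)
```

The combined estimate lies closer to the more reliable cue, and its variance is smaller than that of either cue alone. The difficulty described above is that these variances change over time and are not given to the animal in advance.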

Despite this complexity, theoretical studies have shown that the optimal solution can be achieved by simply summing neural activity across modalities and time, without any form of reliability-dependent synaptic reweighting, as long as sensory inputs are represented with a type of code known as an invariant linear probabilistic population code (ilPPC). Although this possibility had been raised in theory, it had not been tested experimentally.
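
The following sketch illustrates, under simplifying assumptions, why plain summation can suffice for such a code. It is not the model used in the study. It assumes independent Poisson neurons with identical Gaussian tuning curves in both modalities, a flat prior, and a densely tiled population, so that the log posterior over heading is linear in the spike counts. All parameter values and names (`preferred`, `sigma`, the gains 20 and 5) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

preferred = np.linspace(-40.0, 40.0, 81)  # preferred headings (deg), hypothetical
grid = np.linspace(-20.0, 20.0, 401)      # candidate headings used for decoding
sigma = 10.0                              # tuning width (deg), hypothetical


def tuning(heading):
    """Unit-gain Gaussian tuning curves evaluated at one heading."""
    return np.exp(-0.5 * ((heading - preferred) / sigma) ** 2)


def log_posterior(counts):
    """Unnormalized log posterior over `grid`, given spike counts.

    Assumption: log p(s | r) = sum_i r_i * log f_i(s) + const, which holds
    for independent Poisson neurons when the summed tuning curves are
    roughly constant in s. The decoding kernel log f_i(s) does not depend
    on the gain (reliability) of the input.
    """
    log_f = -0.5 * ((grid[:, None] - preferred[None, :]) / sigma) ** 2
    lp = log_f @ counts
    return lp - lp.max()


true_heading = 5.0
# Reliability is carried by the gain: the higher-gain cue is more reliable.
r_visual = rng.poisson(20.0 * tuning(true_heading))
r_vestibular = rng.poisson(5.0 * tuning(true_heading))

# Decoding the summed activity...
lp_sum = log_posterior(r_visual + r_vestibular)
# ...matches multiplying the two single-cue posteriors (adding their logs).
lp_product = log_posterior(r_visual) + log_posterior(r_vestibular)
lp_product -= lp_product.max()

print(np.allclose(lp_sum, lp_product))
```

Because the decoding kernel is the same regardless of gain, the more reliable cue contributes more spikes and therefore automatically carries more weight in the sum, with no explicit reweighting step.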

In this study, the researchers combined experimental and computational approaches to address this question in the context of self-motion perception.

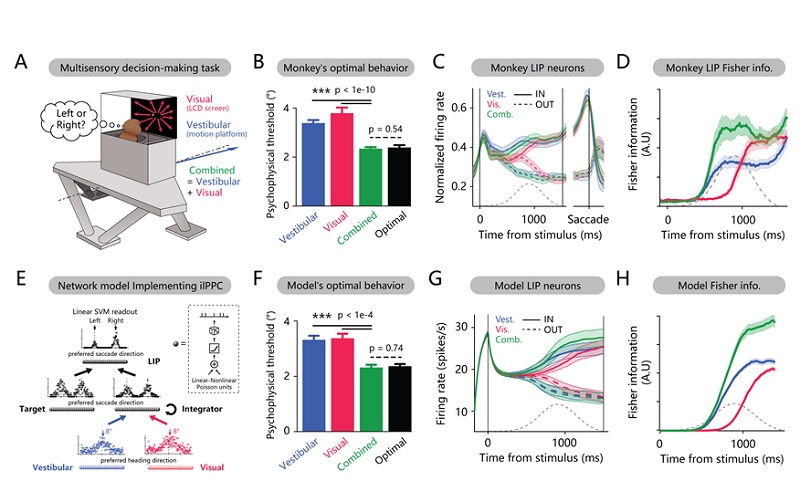

They first recorded from single neurons in the macaque lateral intraparietal area (LIP) while the animals were optimally performing a visual-vestibular heading discrimination task. The results showed that, under unimodal conditions, LIP neurons appear to simply integrate sensory evidence over time, with this evidence related to velocity for the visual input and to acceleration for the vestibular input. In the cue-combined condition, these same neurons compute linear combinations of their visual and vestibular inputs. In addition, the shuffled Fisher information in the combined condition was larger than in the single-cue conditions.

To test whether the self-motion perception system is consistent with the ilPPC framework, the researchers performed theoretical estimations based on the experimental data and built a neural network model that implements the framework. The results showed that linear combinations of temporally integrated inputs are indeed sufficient for optimal integration across time and modalities, without any need for reliability-dependent reweighting at the synaptic level. More importantly, neural activity in the network model was consistent with real LIP responses.

Taken together, this study provides the first neural evidence in support of the ilPPC theory for optimal multisensory decision making, bridging the long-standing gap between the fields of multisensory integration and decision making.

This work, entitled “Neural Correlates of Optimal Multisensory Decision Making under Time-Varying Reliabilities with an Invariant Linear Probabilistic Population Code”, was published online in Neuron on October 10, 2019. HOU Han is the first author; Dr. GU Yong and Prof. Alexandre POUGET are the corresponding authors. This study was supported by grants from both China and Switzerland.

Figure 1. (A) Schematic drawing of the experimental setup. (B) The monkey optimally integrated vestibular and visual evidence in the multisensory decision-making task. (C) Population average of the normalized firing rate of monkey LIP neurons. (D) Shuffled Fisher information of the LIP population. (E) The neural network model that implements the ilPPC framework for vestibular-visual multisensory decision making. (F) The model performed the task optimally, just like the monkey (compare B and F). (G) Neural activity in the network model was similar to the real LIP responses (compare C and G). (H) The shuffled Fisher information of the model’s decision layer was similar to that of the LIP population (compare D and H). (Image by GU Yong)

Contact:

GU Yong: guyong@ion.ac.cn
